Friday, September 19, 2025

By Tom Li, Daniel Weng and Rachel Kim

Contaminated site investigators and remediation professionals aren’t necessarily artificial intelligence (AI) experts or even AI enthusiasts. But writing and reviewing environmental site assessment reports, and advising on and executing remediations on brownfield sites across Canada, are part of the job, and the advent of AI presents exciting opportunities to transform how we approach data-intensive tasks.

As environmental engineers, we recognize and are excited to explore the potential of AI to streamline the preparation of repetitive reports that follow established templates. We’re pleased to share our team’s experience in developing and implementing an AI-assisted solution to support the preparation of Phase 1 Environmental Site Assessment (ESA) reports, highlighting the resources required, challenges faced, and lessons learned along the way.

Overview of ESAs

A Phase 1 ESA is primarily a desktop background review of a property to determine if there are potential contaminating activities from current or historical uses, based on observations and documentation. Its purpose is to identify areas of potential environmental concern and assess whether further intrusive investigation is needed to determine if a site is contaminated. These reports generally require reviewing publicly available records and client-provided documentation, conducting site visits, and interviewing individuals with knowledge of the current and historic operations at the property.

Phase 1 ESAs are critical in our industry, but they have become somewhat commoditized, often leading to tight budgets and timelines. They require attention to detail and are a source of high commercial liability: missing or misinterpreting records can lead to samples not being collected at the right locations (or at all), and to a client or other stakeholders making decisions on a property without discovering contamination. Such work is often allocated to junior and intermediate staff to control costs or limit losses. Many consultants in the industry write Phase 1 ESAs at low cost to get the opportunity to conduct Phase 2 ESA investigations and potential future remediation work with clients. Our environmental team writes over 50 Phase 1 ESAs a year.

One particularly time-consuming aspect of Phase 1 ESAs involves reviewing a raw-data document provided by a third-party vendor, the ERIS (Environmental Risks Information Services) report. It is a comprehensive list of environmental records within 250 m of the site, including spills, industrial operations, and the presence of fuel storage tanks.

In many urban areas, there can be thousands of pages of records that an environmental professional must evaluate. The manual review process is not only time-consuming, but also subject to human error and constrained by the availability of qualified staff.

Leveraging AI to automate portions of this work provides an opportunity to improve efficiency, accuracy, and profitability while allowing our environmental practitioners to focus their expertise where it matters most.

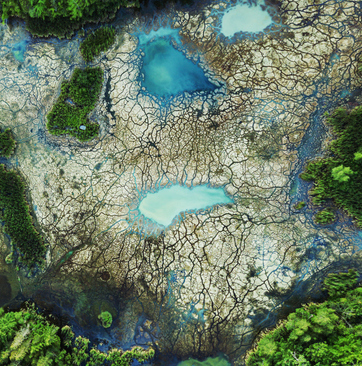

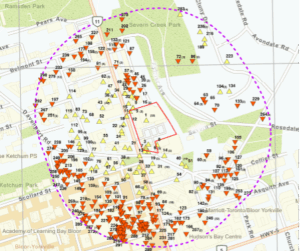

ERIS (Environmental Risks Information Services) Report with 700+ records in an urban area.

Evaluating the AI options

At first glance, using a publicly available generative AI chatbot might seem like an easy solution. However, several critical factors prevent its use in our specialized context. Phase 1 ESAs demand interpretation of text and graphical data, technical precision, and professional judgment that generic chatbots do not yet reliably provide. Generic chatbots may generate plausible-sounding but incorrect and often inconsistent outputs, which could lead to errors in assessments and decision-making. Using generic chatbots often involves transferring sensitive data to external servers, so we also had legal and data custody concerns that necessitated a more controlled and tailored approach. We need to safeguard proprietary and client-sensitive information from unintentionally becoming part of a public AI model’s training data and ensure compliance with regional regulations and industry guidelines. This professional obligation underscores the importance of developing AI solutions tailored to specific professional requirements. We also needed the solution’s output to complement our staff’s expertise and the way they work, and to fit the format of our report template.

Resource requirements

Developing such a specialized AI tool involves significant investment, both in terms of technology and expertise. In our case, we have an AI Team with a deep understanding of AI and software development, and an Environmental Team with subject matter expertise and experience writing Phase 1 ESAs. Our AI Team monitors the latest industry trends and the strengths and weaknesses of various approaches. This team, dedicated to advancing the practical adoption of AI within our organization, worked closely with our Environmental Team to develop an AI-assisted solution to support the preparation of Phase 1 ESAs.

The development was iterative, incorporating feedback, testing, and real data over more than 20 iterations to refine the solution’s capabilities on cases ranging from simple to complex. Developing the tool involved turnkey software development, configuring Large Language Models, and integrating software services to extract data from large ERIS reports, structure it into a usable format, and then use AI to identify relevant information based on guidance from the Environmental Team. Funding was allocated not only for technological research and development, ongoing testing, and iterative improvements, but also for collaborative efforts across disciplines and departments.
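The extract, structure, and identify steps described above can be pictured with a minimal Python sketch. It is an illustration only, not the solution described in this article: the one-line record layout, the field names, the `extract_records` and `flag_relevant` helpers, and the keyword list are all hypothetical, and simple keyword matching stands in for the Large Language Model step.

```python
import re
from dataclasses import dataclass

# Hypothetical plain-text layout for one record; real vendor reports differ.
RECORD_PATTERN = re.compile(
    r"Record:\s*(?P<record_id>\S+)\s*\|\s*"
    r"Distance:\s*(?P<distance_m>\d+(?:\.\d+)?)\s*m\s*\|\s*"
    r"Details:\s*(?P<details>.*)"
)

# Illustrative screening terms that subject matter experts might supply.
KEYWORDS = ("spill", "fuel storage tank", "dry cleaner")


@dataclass
class Record:
    record_id: str
    distance_m: float
    details: str


def extract_records(raw_text: str) -> list[Record]:
    """Turn raw report text into structured records, skipping unparseable lines."""
    records = []
    for line in raw_text.splitlines():
        match = RECORD_PATTERN.match(line.strip())
        if match:
            records.append(
                Record(
                    record_id=match["record_id"],
                    distance_m=float(match["distance_m"]),
                    details=match["details"].strip(),
                )
            )
    return records


def flag_relevant(records, keywords=KEYWORDS, radius_m=250.0):
    """Keep records inside the search radius that mention a screening term."""
    return [
        r for r in records
        if r.distance_m <= radius_m
        and any(k in r.details.lower() for k in keywords)
    ]


# Made-up sample input, for illustration only.
SAMPLE = """\
Record: A-001 | Distance: 120 m | Details: Historical fuel storage tank, removed
Record: A-002 | Distance: 300 m | Details: Spill of diesel reported
Record: A-003 | Distance: 40 m | Details: Retail bakery
"""

flagged = flag_relevant(extract_records(SAMPLE))
print([r.record_id for r in flagged])  # ['A-001']
```

In this made-up sample, only the first record is flagged: the second lies outside the 250 m radius and the third mentions no screening term. Keeping extraction separate from screening means the structured records can be checked on their own before any AI step is applied.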

During the iterative effort, the Environmental Team provided the AI Team with a number of Phase 1 ESAs of varying complexity, from single 200 m² properties in smaller municipalities with dozens of records to transit hubs in Toronto where the study area could have thousands of historic records. The AI Team reviewed the Phase 1 ESAs and designed the AI-assisted solution to output data in formats compatible with our existing report templates, so that the output is easy for our Environmental Team to assess and manipulate.

As many environmental practitioners know, data quality of historic environmental records is inconsistent. Depending on the data source and its age, records in an ERIS report may be incomplete. Knowing this, the team also tested the accuracy of the AI-assisted solution when there were gaps in the input data. The AI Team was able to configure the solution’s generative capabilities to address gaps where they existed.

Outcomes and capabilities

Consistent with our previous experience, AI-generated content may look great until you review it in detail. Our experiments with the accuracy of AI’s generative capabilities led us to conclude that we cannot currently allow AI to generate text that is not already included in the input reports. Hallucinated (i.e., false) content generated by AI was too easily missed by report writers and senior reviewers and would be a legal liability. Data gaps in reports require human interpretation and explanation, so where data gaps exist in input reports, the AI-assisted solution leaves the output blank for the Environmental Team to interpret.
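One way to picture the "leave it blank" rule is a check that accepts a candidate field value only if it appears verbatim in the input text. The sketch below is an assumption about how such a guardrail could look, not the actual implementation; `keep_if_grounded`, `normalize`, the field names, and the sample text are hypothetical.

```python
def normalize(text: str) -> str:
    """Collapse whitespace and case so line breaks do not defeat matching."""
    return " ".join(text.lower().split())


def keep_if_grounded(candidate: str, source_text: str) -> str:
    """Return the candidate only if it occurs verbatim in the source; else blank."""
    if candidate and normalize(candidate) in normalize(source_text):
        return candidate
    return ""


# Made-up input text with a data gap: the cleanup status is missing.
source = "Spill record 1998: 200 L of diesel released at loading dock.\nCleanup status: "

# Made-up draft output; the last value does not appear anywhere in the source.
draft = {
    "substance": "diesel",
    "quantity": "200 L",
    "cleanup_status": "Remediated in 1999",
}

checked = {field: keep_if_grounded(value, source) for field, value in draft.items()}
print(checked)
# {'substance': 'diesel', 'quantity': '200 L', 'cleanup_status': ''}
```

A verbatim check like this is deliberately strict. It would also blank a correct paraphrase, which suits a workflow where a practitioner fills and explains every gap.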

The AI-assisted solution was ultimately successful in significantly reducing the time required to process extensive data sets. Preliminary results show a reduction of approximately 50 to 60 per cent in the time spent reviewing reports. For example, review time fell from eight hours to four hours on a small site, and from 40 hours to 16 hours on a more complex site. This efficiency gain allows our staff to allocate more time to analysis and interpretation rather than data processing.
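The two examples quoted above work out as follows. This is plain arithmetic on the hours stated in the text; the `percent_reduction` helper is named here for illustration only.

```python
def percent_reduction(before_hours: float, after_hours: float) -> float:
    """Percentage of review time saved relative to the original hours."""
    return (before_hours - after_hours) * 100 / before_hours


print(percent_reduction(8, 4))    # small site: 50.0
print(percent_reduction(40, 16))  # more complex site: 60.0
```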

Our AI-assisted solution currently focuses on text and cannot yet interpret drawings, figures, spreadsheets, and numbers. Doing so would require integrating other AI models, which we plan to explore. For example, the solution cannot interpret Figure 1 above, which tells a reader where a record is located and how far it is from the Phase 1 property. Experienced environmental practitioners apply a nuanced level of interpretation, such as assessing whether an activity is upstream or downstream of the property, the impact of local geology, the presence of utilities and waterbodies, and the transport behaviour of contaminants associated with the records. Given the limitations of AI in understanding context and making nuanced decisions, especially when data gaps exist, human oversight remains essential.

Key takeaways and guardrails

This was a fruitful exercise in applying AI to a specialized technical application, and we learned several key lessons:

- Collaboration is essential: Successful implementation of AI in specialized technical applications requires close collaboration between AI experts and subject matter experts. People with real technical and domain knowledge are critical to developing solutions that are effective and reliable. At minimum, you need one software developer with AI expertise who understands how to develop and integrate software to deliver outputs in a consistent format that is useful for technical users, and one committed technical expert who understands the subject matter.

- Quality data and iterative testing are essential: The team needs to provide high-quality data to support a rigorous iterative testing process. Subject matter experts should review outputs and provide feedback on how they intend to use them, which helps fine-tune the solution to handle real-world complexities, including differences in how individual users engage with the solution.

- Resource investment required: Developing a custom AI solution involves costs not just in technology, but also in the time and effort of cross-functional teams. Processing data is not free. Commercial entities should have commercial agreements with AI software technology providers and data providers to ensure appropriate safeguards are in place to protect proprietary and sensitive information. At the outset, the cost to develop, integrate, and test solutions outweighs technology costs by a significant amount. Organizations need to be prepared to invest accordingly.

- Human oversight is non-negotiable: Despite the efficiencies gained through automation, AI outputs must be thoroughly reviewed by human experts. There is always a risk that AI-generated or predicted outputs may not be factual despite reading well at a surface level. As our solution and our use of it matured, we determined that junior practitioners should not rely on the solution until they have proven their ability to write Phase 1 ESA reports and demonstrated good professional judgement. The risk of relying solely on AI-generated content is too great, particularly when inaccuracies can lead to significant liabilities.

With our success and experience, we have a hammer (AI) looking for a nail (more tasks to automate) and are actively exploring new opportunities to apply AI in other areas of work. Each potential use case will be carefully evaluated not only to validate the business case, but also to ensure it aligns with our commitment to accuracy, reliability, and professional integrity. We must ensure that AI outputs are not untrue, inaccurate, or difficult to validate. As professionals, we have a high standard of care when we sign and stamp our work. Implementing AI in specialized technical reporting requires significant resources, collaboration, and a cautious approach to ensure that outputs meet the high standards required in our industry. Our environmental and engineering reports remain with our clients and potentially in the public domain for many years. Our work needs to be trustworthy as we integrate new technologies, including AI.

Tom Li is the Ontario Business Operations and Business Development Manager, Daniel Weng is the AI & Digital Innovation Lead and Rachel Kim is an Environmental E.I.T. at Parsons Inc.

Note: This article originally appeared in the Spring Edition of Environment Journal, which is available in a multimedia digital format.

Featured image credit: Getty Images